Custom Query (986 matches)

Results (616 - 618 of 986)

| Ticket | Owner | Reporter | Resolution | Summary |
|---|---|---|---|---|
| #744 | | | Done | Added several colormaps and other Matlab scripts |

| Description |

Several new colormap functions are now located in the new matlab/colormaps sub-directory:

The following functions were added for colormap processing:

Other miscellaneous functions:



| #745 | | | Done | Updated Matlab Colormaps |

| Description |

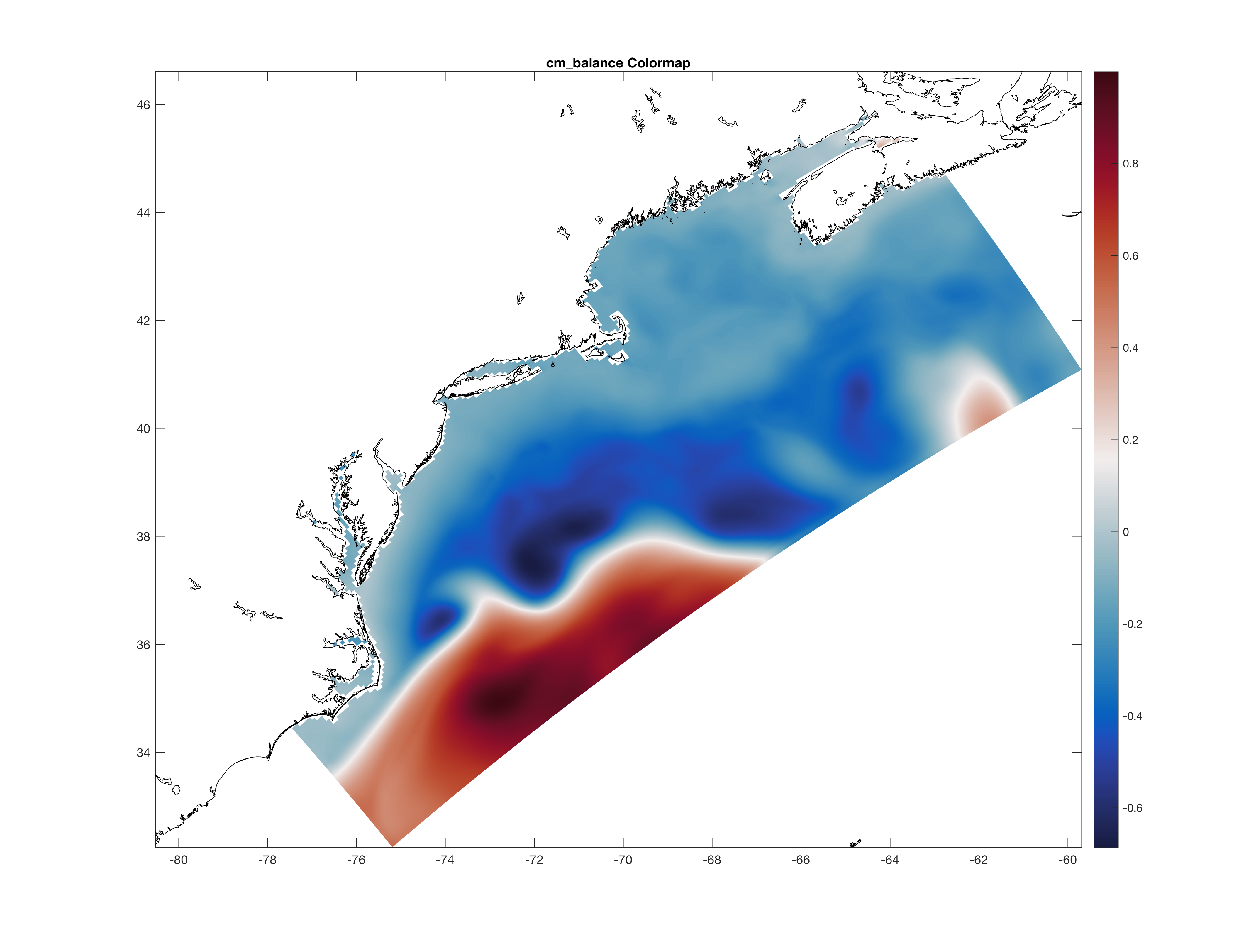

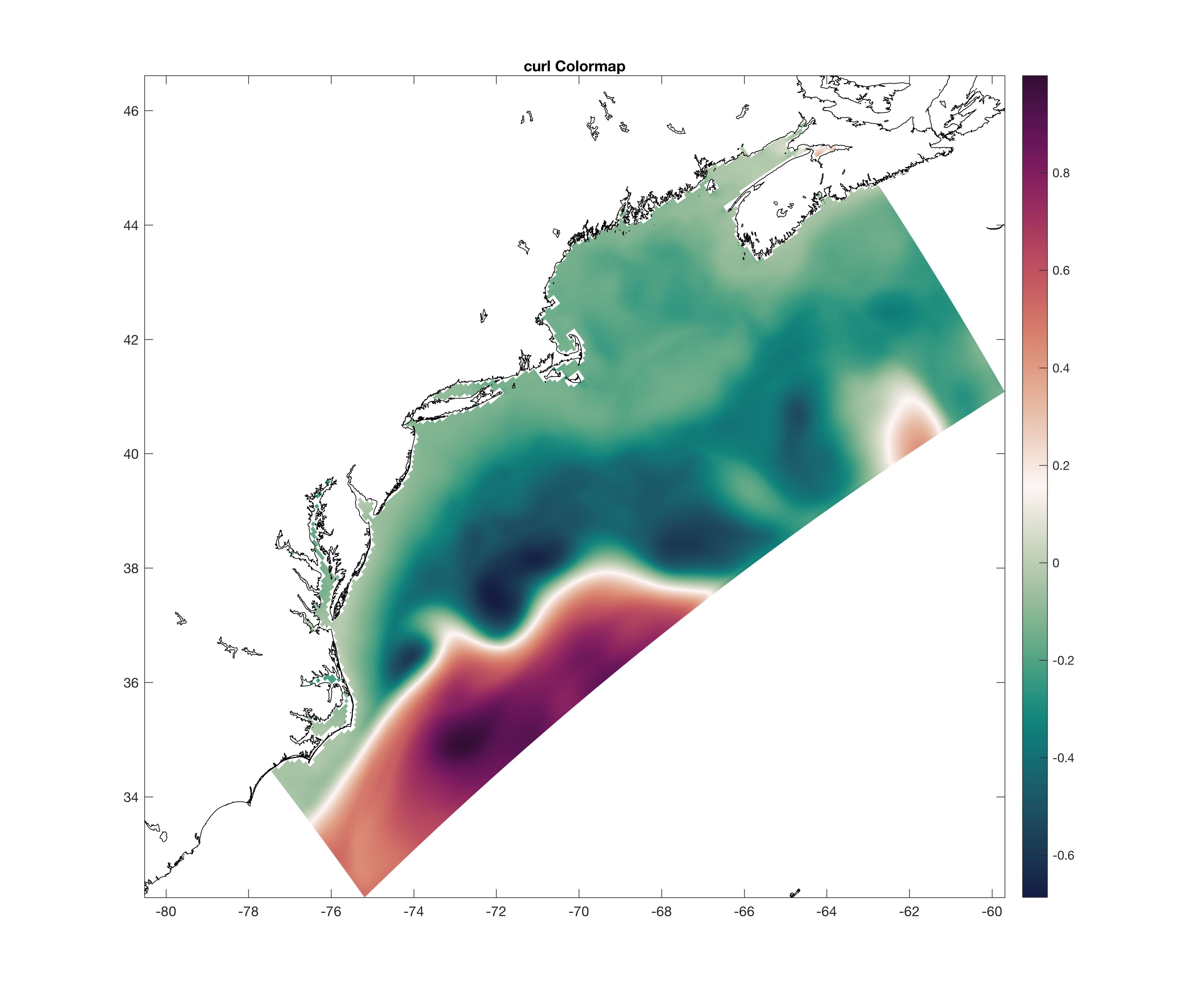

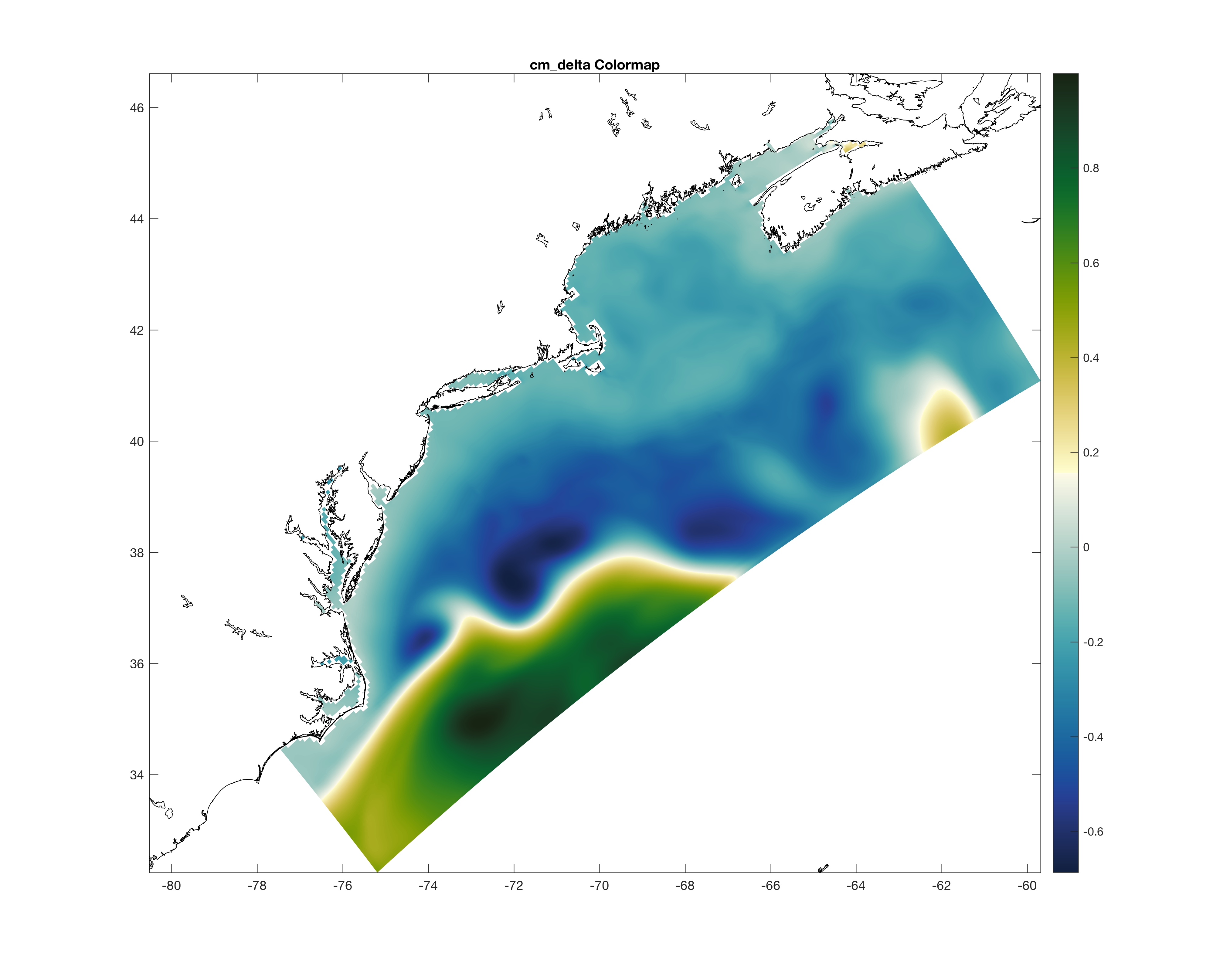

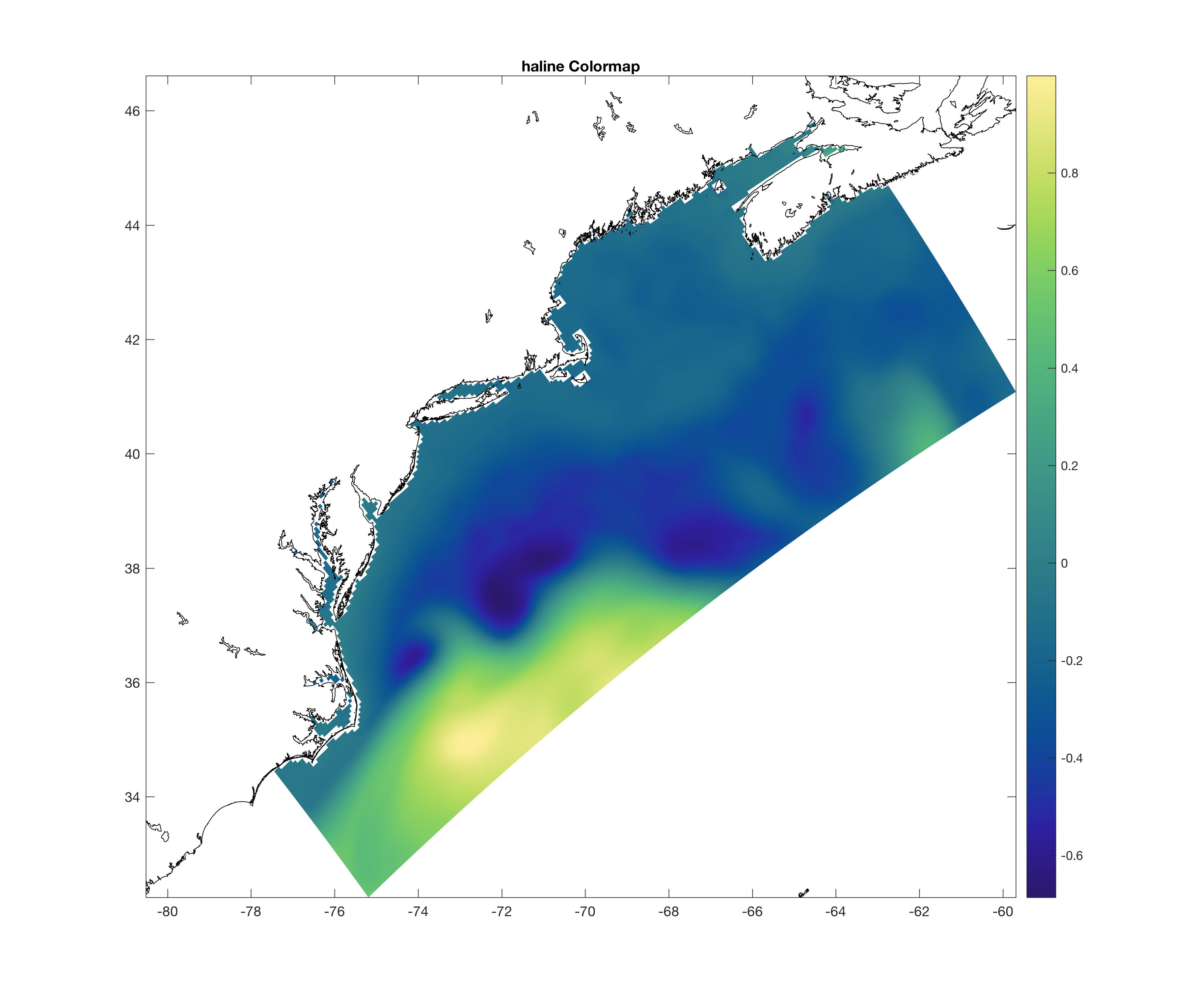

The color maps released on src:ticket:744 were modified to allow interpolation according to the number of colors requested. All the color maps have 256 values, except cm_delta with 512 values. All the color maps have an optional input argument for the number of colors desired. For example, cm_balance.m has: % CM_BALANCE: 256 color palette from CMOCEAN % % cmap = cm_balance(M) % % BALANCE colormap by Kristen Thyng. % % On Input: % % M Number of colors (integer, OPTIONAL) % % On Ouput: % % cmap Mx3 colormap matrix % % Usage: % % colormap(cm_balance) % colormap(flipud(cm_balance)) % % https://github.com/matplotlib/cmocean/tree/master/cmocean/rgb % % Thyng, K.M., C.A. Greene, R.D. Hetland, H.M. Zimmerle, and S.F DiMarco, 2016: % True colord of oceanography: Guidelines for effective and accurate colormap % selection, Oceanography, 29(3), 9-13, http://dx.doi.org/10.5670/oceanog.2016.66 % If we want to overwrite the default number of colors, we can use for example: >> colormap(cm_balance(128));

or equivalent

>> cmocean('balance', 128);

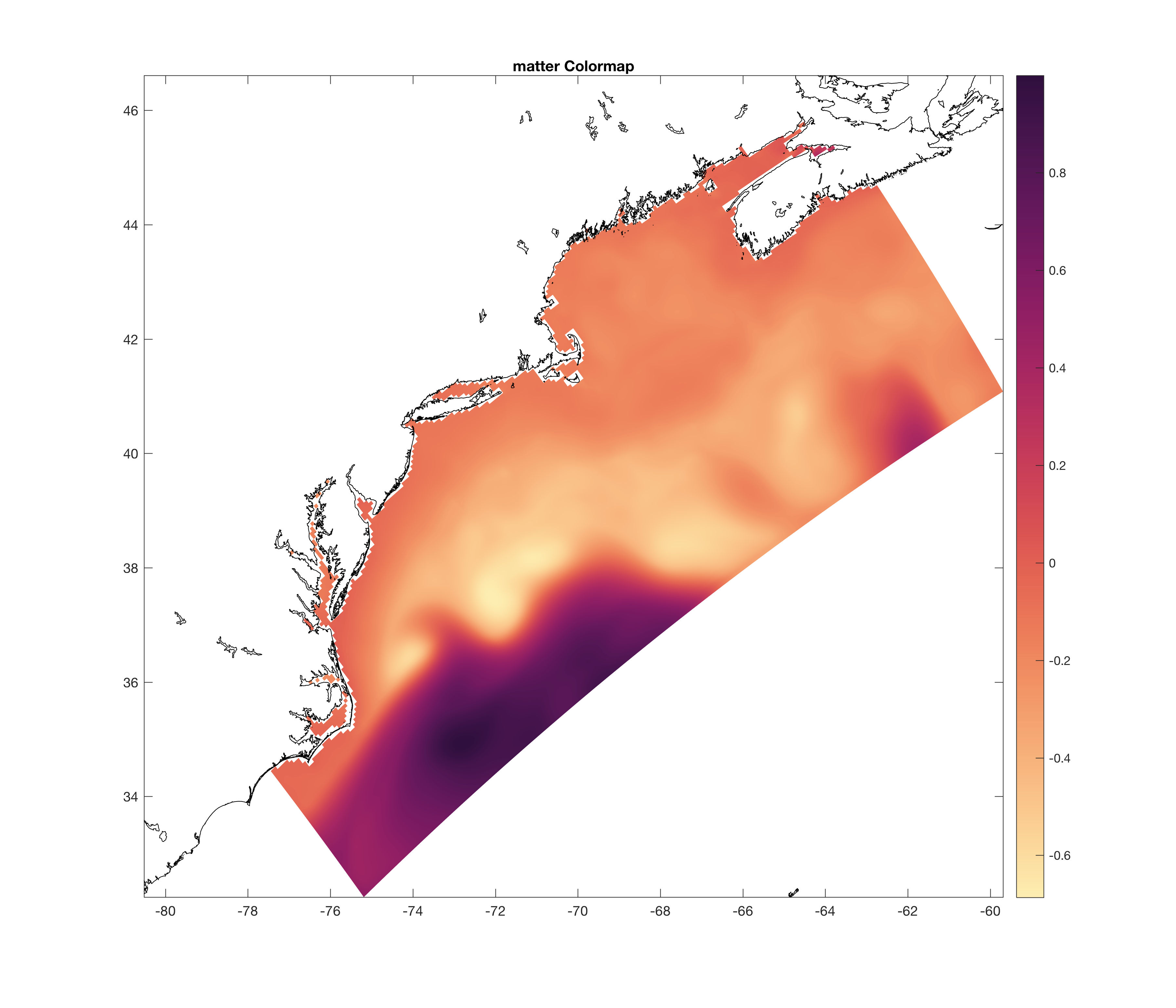

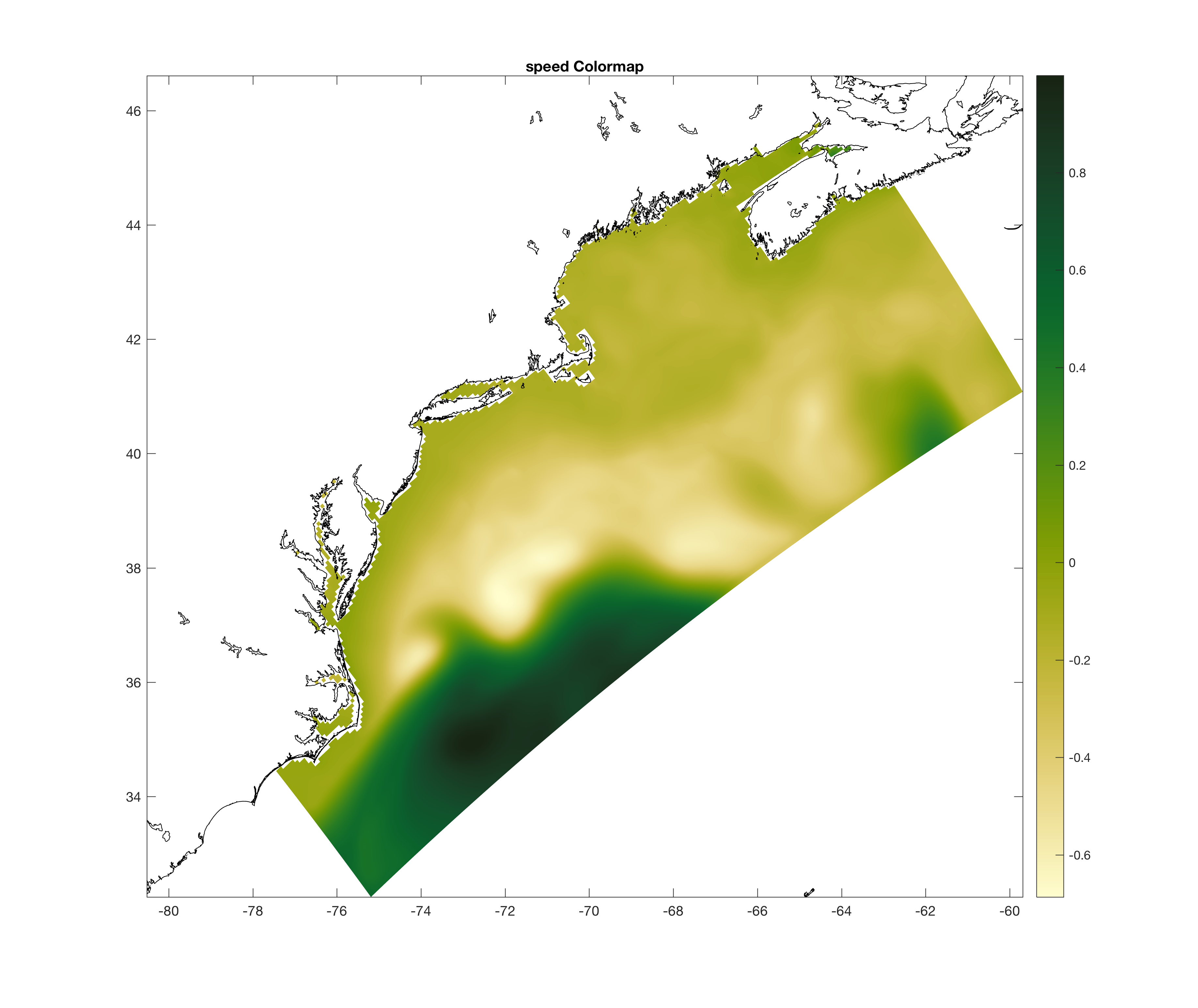

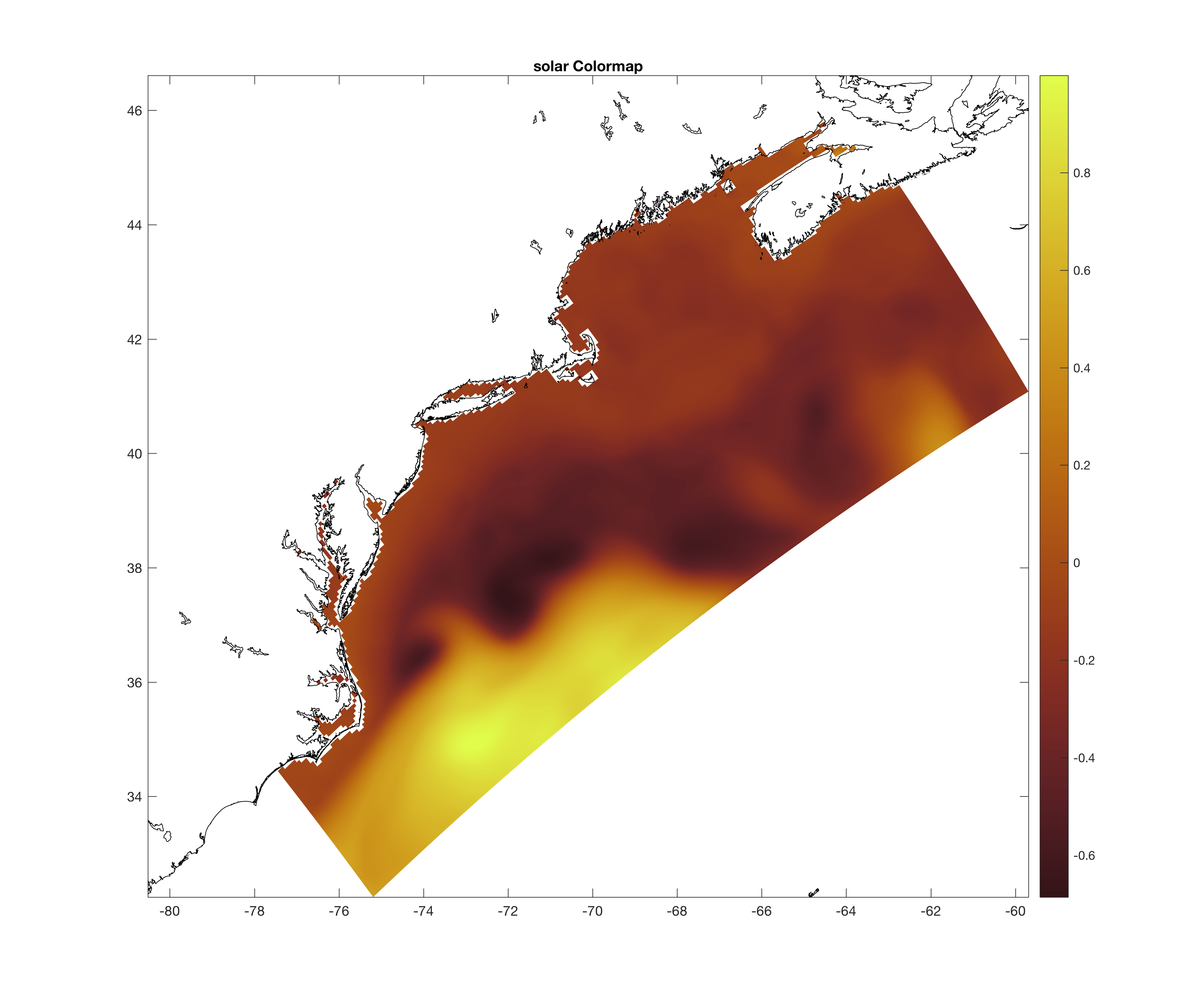

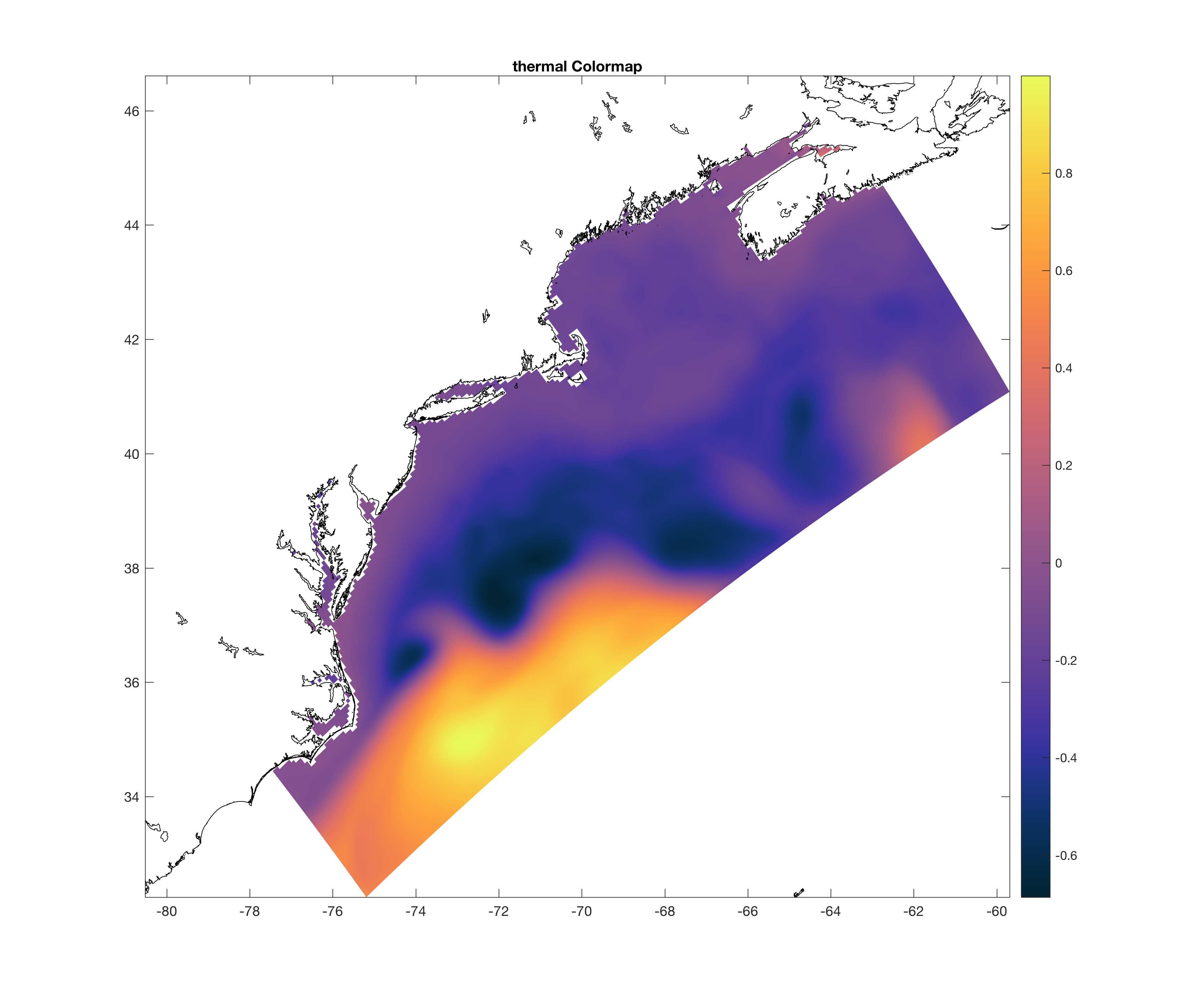

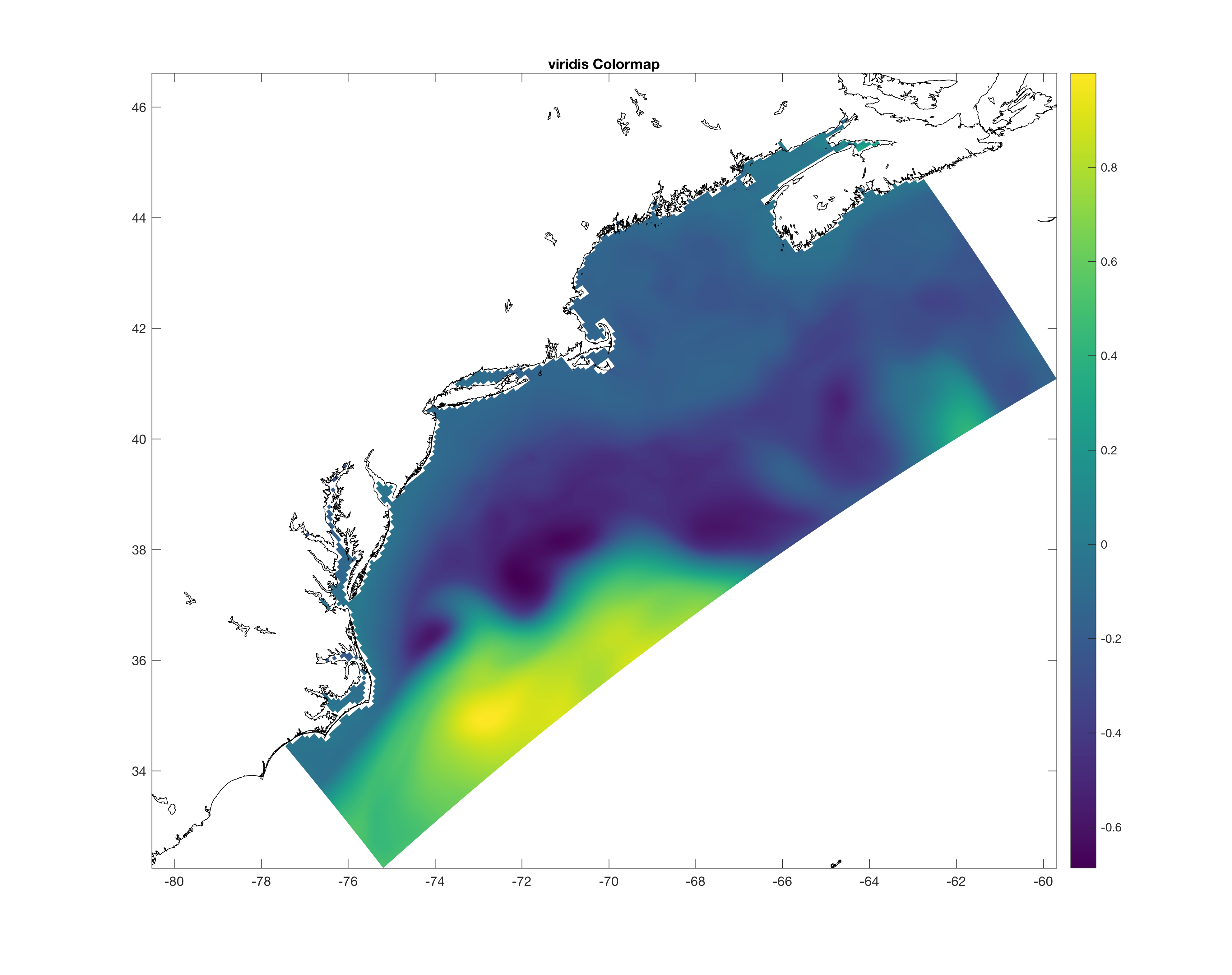

notice that in cmocean the cm_ prefix is dropped. Read documentation by issuing the help command in Matlab. Here are some of the examples with various color maps: cm_balance:cm_curl:cm_delta:cm_haline:cm_matter:cm_speed:cm_solar:cm_thermal:viridis: |
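The interpolation described in this ticket, resampling a stored 256-color (or 512-color) RGB table to the M colors requested, can be sketched with interp1. This is a minimal sketch under stated assumptions, not the code inside cm_balance.m: the base palette is a hypothetical blue-to-red ramp standing in for the CMOCEAN RGB data, the helper name resample_cmap is hypothetical, and linear interpolation of each RGB column is assumed rather than confirmed.

```matlab
% Hypothetical base palette: a 256x3 blue-to-red ramp standing in for the
% CMOCEAN RGB table stored inside a cm_*.m function (not the real data).
N    = 256;
base = [linspace(0, 1, N)', zeros(N, 1), linspace(1, 0, N)'];

% Hypothetical helper: linearly interpolate each RGB column of a K x 3 table
% onto M equally spaced points in [0, 1], returning an M x 3 colormap.
resample_cmap = @(cmap, M) interp1(linspace(0, 1, size(cmap, 1)), cmap, ...
                                   linspace(0, 1, M)', 'linear');

% Requesting N colors returns the stored table unchanged (up to round-off).
cmap256 = resample_cmap(base, N);

% Requesting fewer colors, analogous to colormap(cm_balance(128)).
cmap128 = resample_cmap(base, 128);

% Reversing works as in colormap(flipud(cm_balance)).
cmap128r = flipud(cmap128);

% To apply it to the current figure: colormap(cmap128);
```

In a function file such as cm_balance.m, the optional M would presumably default to the table length when nargin < 1; that detail is an assumption here. Asking for fewer colors than stored gives a coarser palette, while asking for more only inserts linearly interpolated shades between the stored colors.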


| #746 | | | Done | Updated ROMS Plotting Package |

| Description |

I updated a few things in the ROMS plotting package, which uses the NCAR/GKS library:

