Custom Query (986 matches)

Results (769 - 771 of 986)

| Ticket | Owner | Reporter | Resolution | Summary |

|---|---|---|---|---|

| #745 | Done | Updated Matlab Colormaps | ||

| Description |

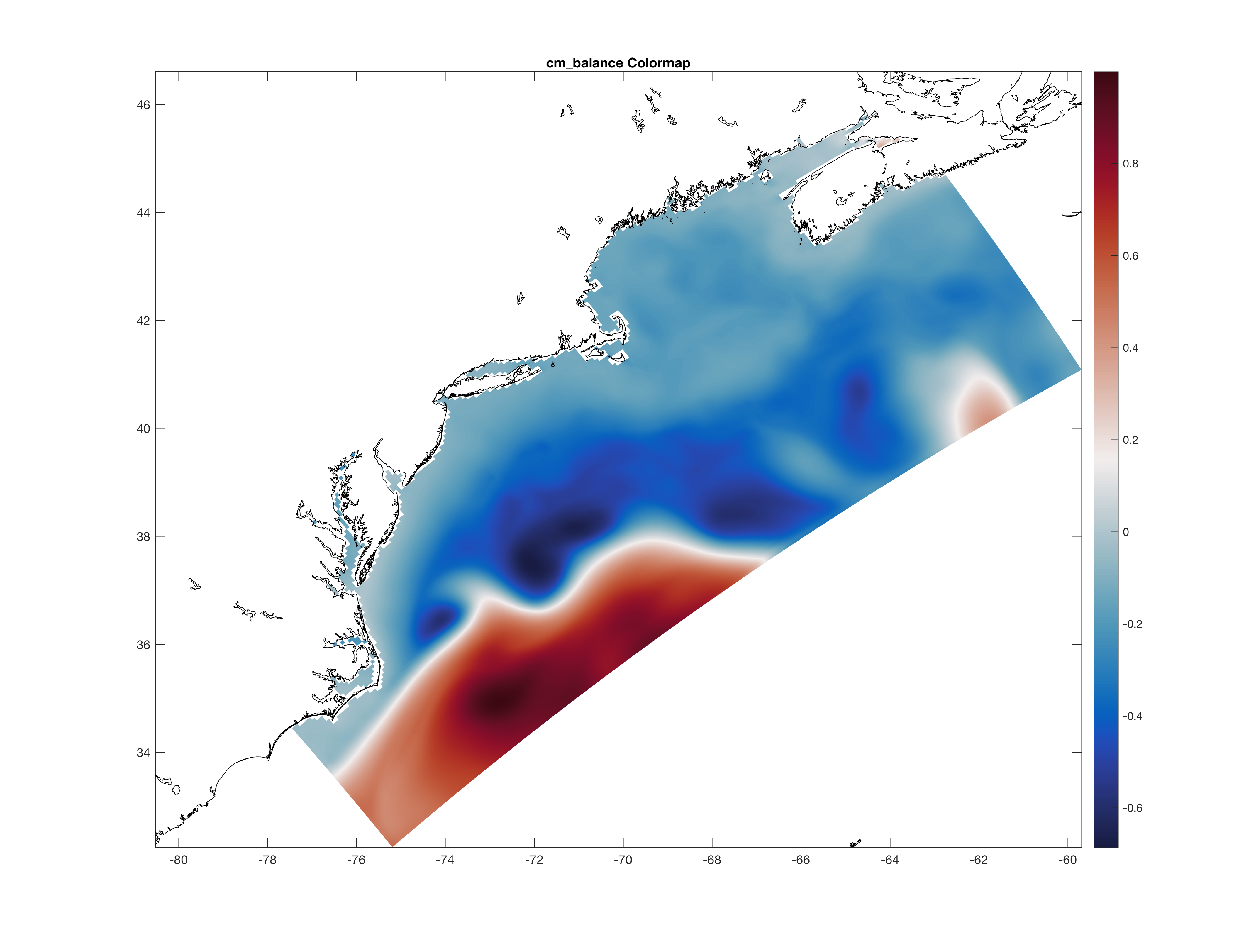

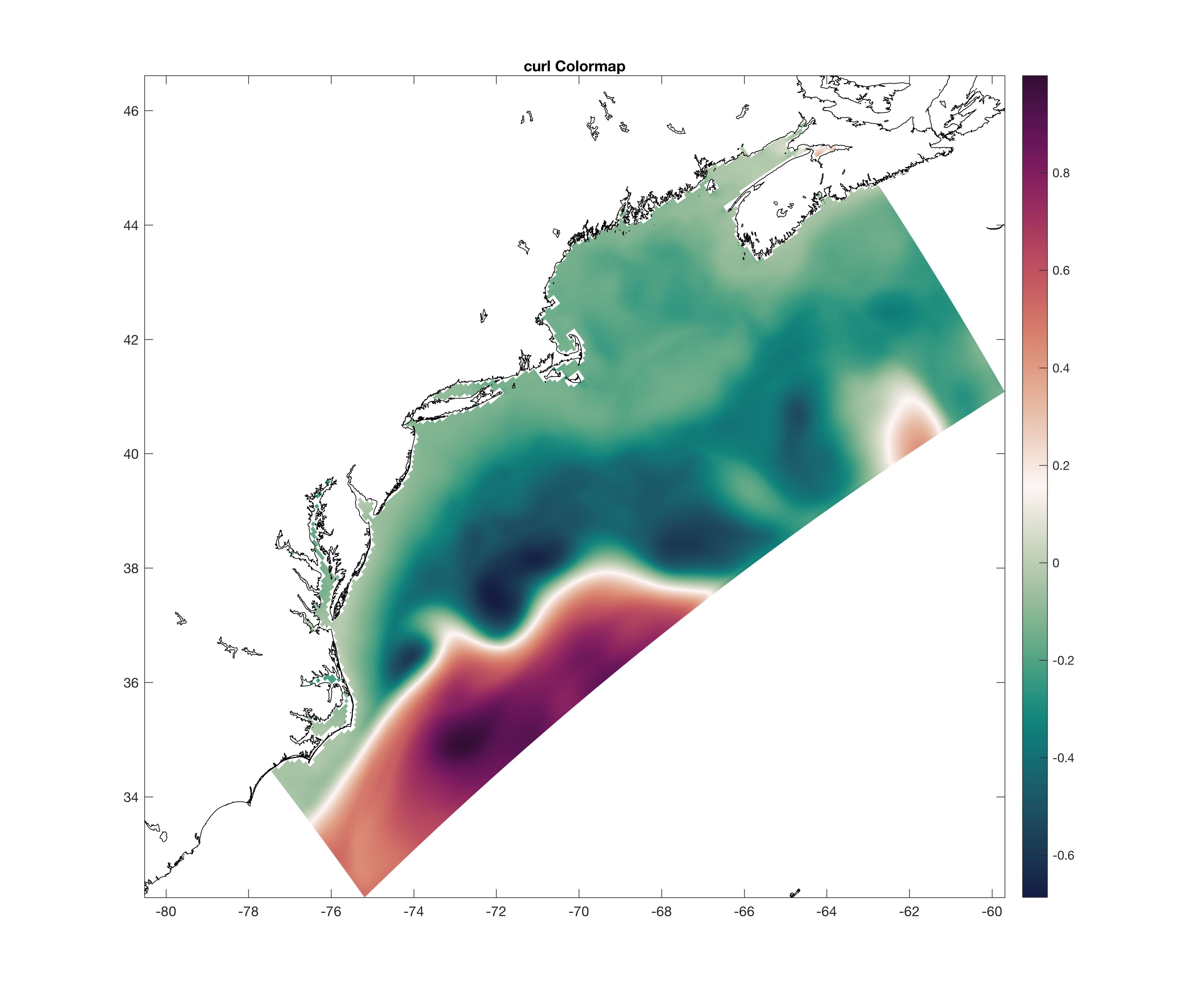

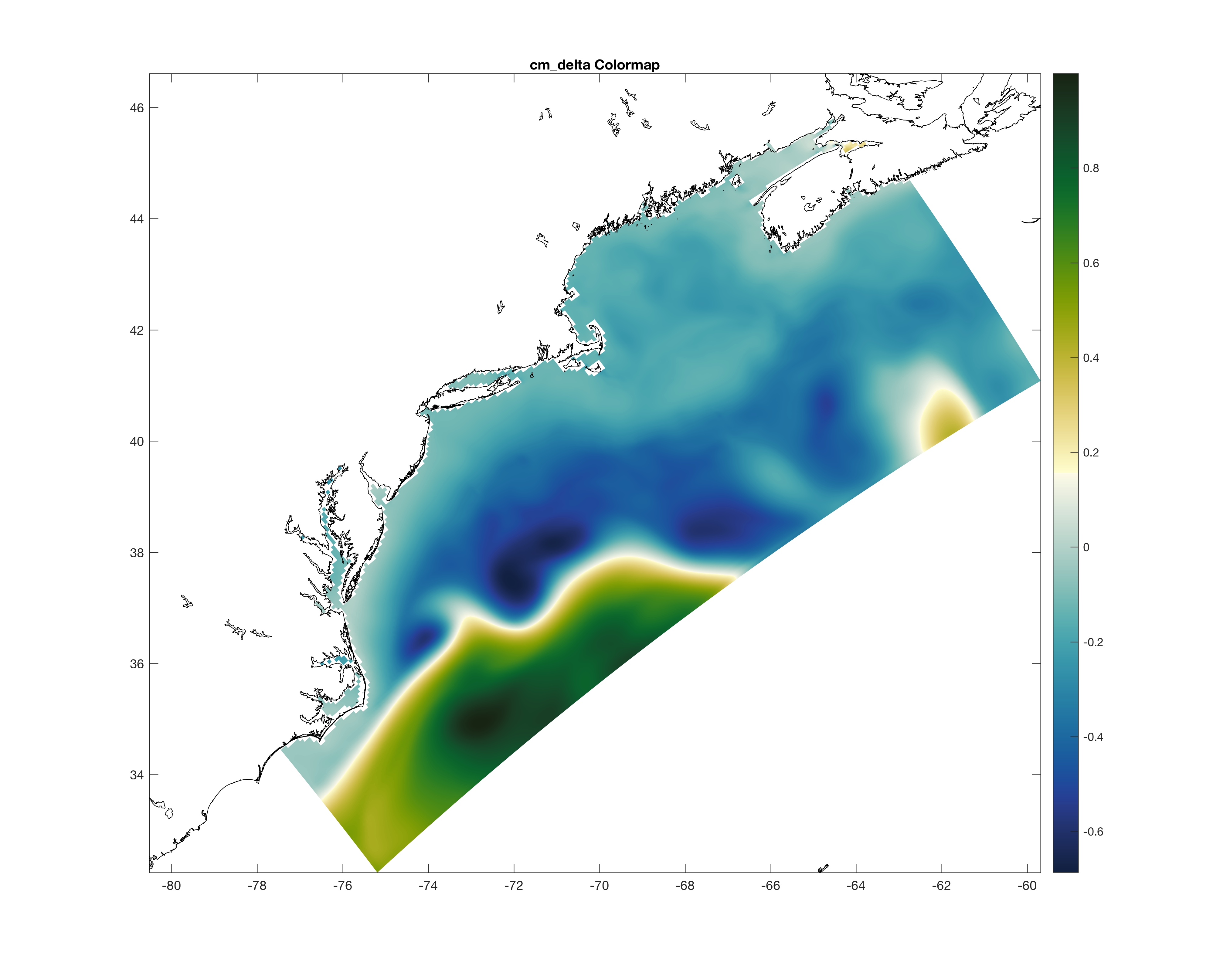

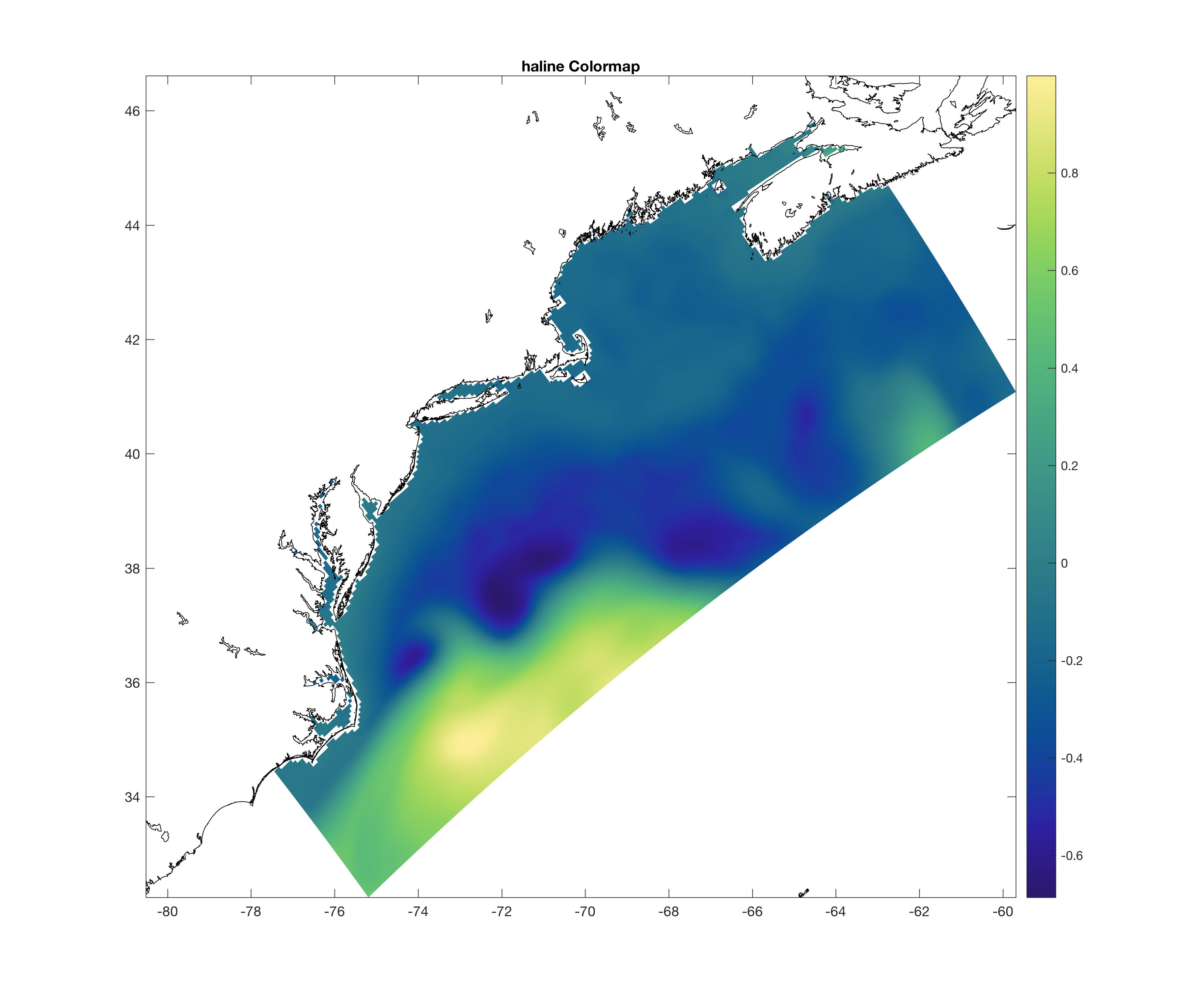

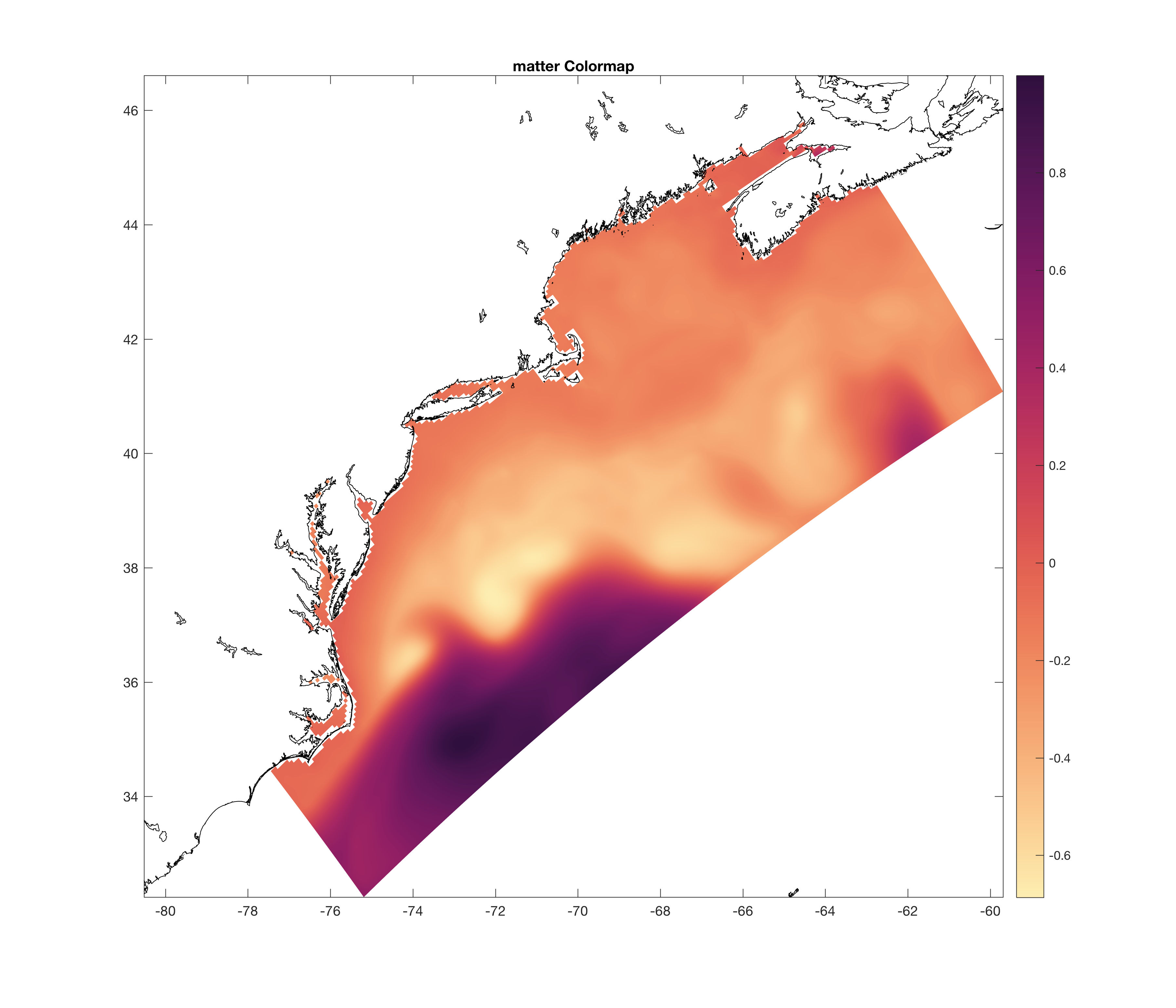

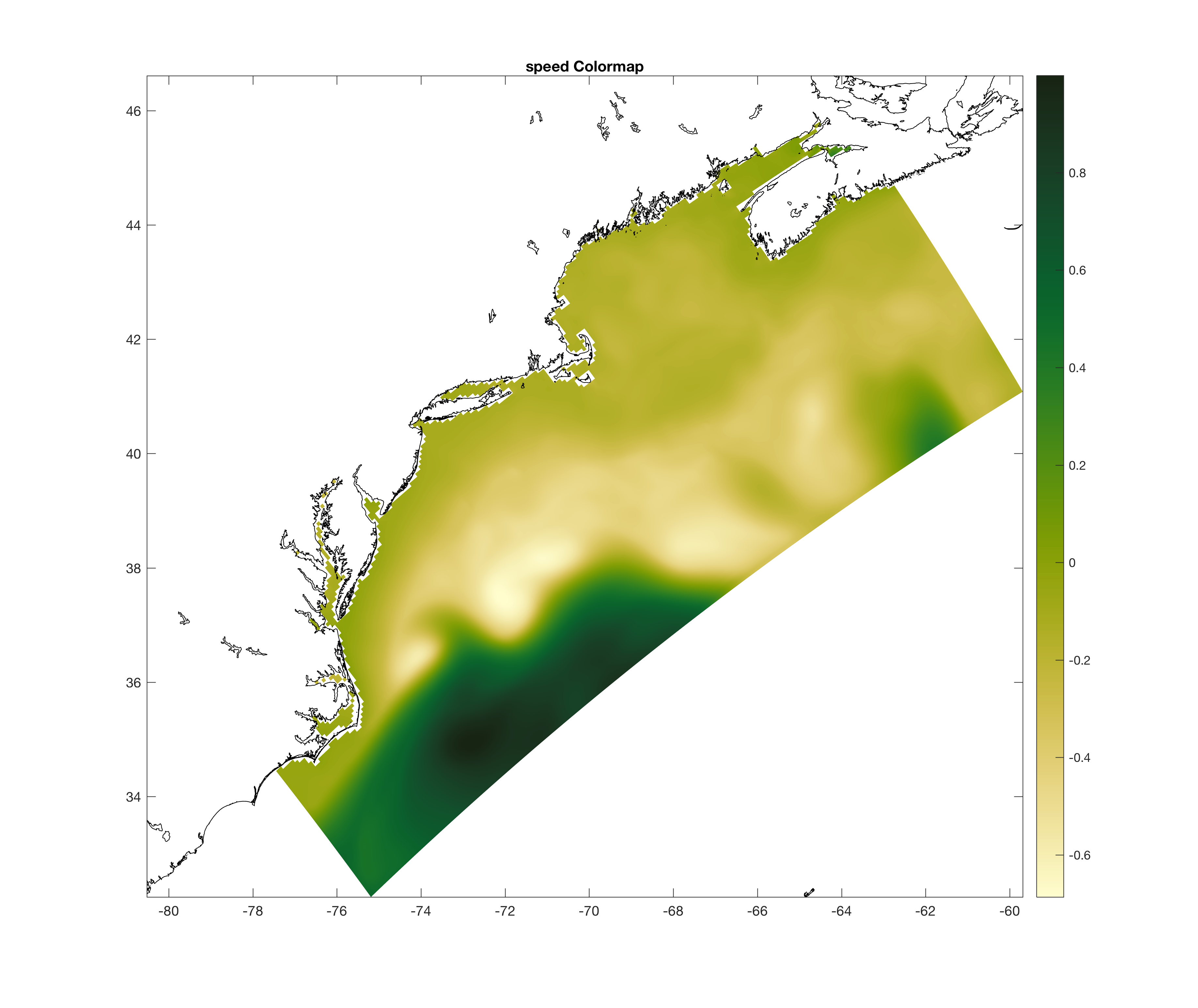

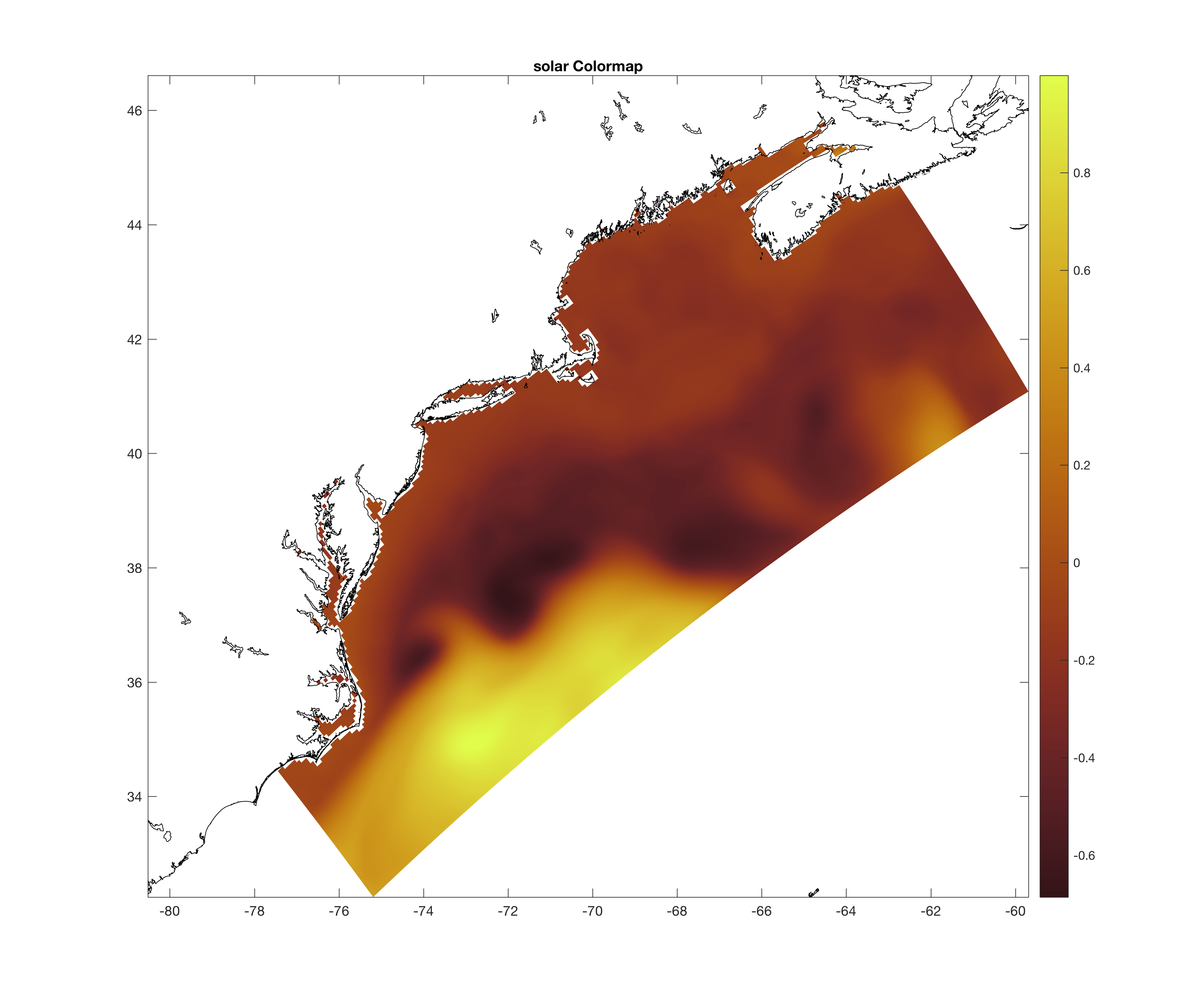

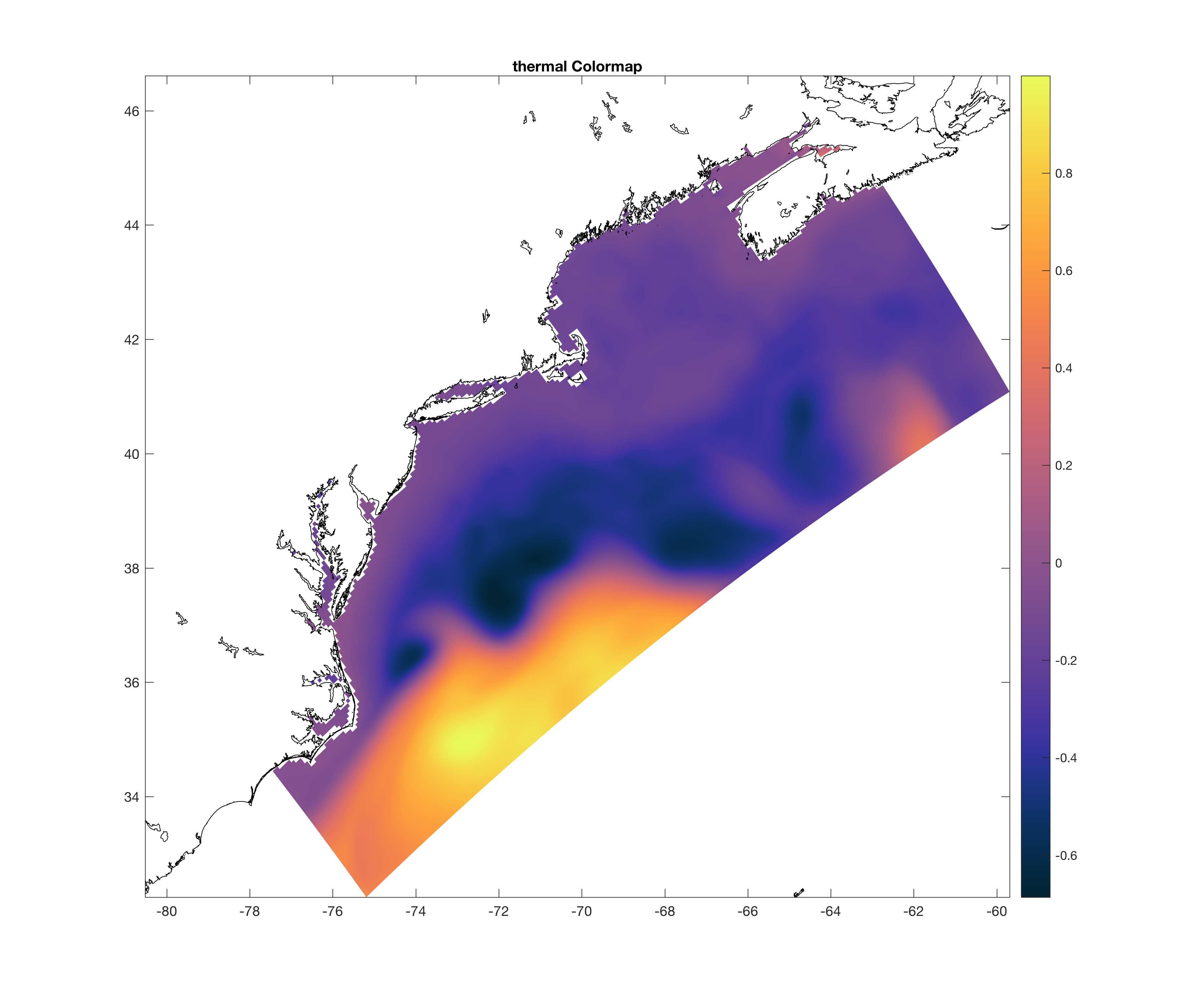

The color maps released in ticket #744 were modified to allow interpolation according to the number of colors requested. All the color maps have 256 values, except cm_delta, which has 512. Every color map function takes an optional input argument for the number of colors desired. For example, cm_balance.m has:

% CM_BALANCE: 256 color palette from CMOCEAN
%
% cmap = cm_balance(M)
%
% BALANCE colormap by Kristen Thyng.
%
% On Input:
%
%    M        Number of colors (integer, OPTIONAL)
%
% On Output:
%
%    cmap     Mx3 colormap matrix
%
% Usage:
%
%    colormap(cm_balance)
%    colormap(flipud(cm_balance))
%
% https://github.com/matplotlib/cmocean/tree/master/cmocean/rgb
%
% Thyng, K.M., C.A. Greene, R.D. Hetland, H.M. Zimmerle, and S.F. DiMarco, 2016:
%   True colors of oceanography: Guidelines for effective and accurate colormap
%   selection, Oceanography, 29(3), 9-13, http://dx.doi.org/10.5670/oceanog.2016.66
%

To override the default number of colors, we can use, for example:

>> colormap(cm_balance(128));

or, equivalently:

>> cmocean('balance', 128);
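The resampling described in this ticket can be illustrated with a minimal sketch. It is written in plain Python rather than Matlab, and it assumes simple linear interpolation between neighboring rows of the color table; the ticket does not say which interpolation scheme the cm_* functions actually use. The function name `resample_colormap` and the gray ramp used as input are hypothetical stand-ins, not part of the released package.

```python
def resample_colormap(cmap, m):
    """Linearly interpolate an N x 3 list of RGB rows down (or up) to m rows.

    Assumption: linear interpolation on the row index. The real cm_* Matlab
    functions may use a different scheme.
    """
    n = len(cmap)
    if m < 1:
        raise ValueError("number of colors must be at least 1")
    if m == 1:
        return [list(cmap[0])]
    out = []
    for i in range(m):
        # Position of output color i on the original 0..n-1 index axis.
        x = i * (n - 1) / (m - 1)
        lo = int(x)
        hi = min(lo + 1, n - 1)
        t = x - lo
        # Blend the two neighboring rows channel by channel.
        out.append([(1 - t) * a + t * b for a, b in zip(cmap[lo], cmap[hi])])
    return out


# Hypothetical 256-entry gray ramp standing in for a real color table.
base = [[k / 255.0] * 3 for k in range(256)]
cmap128 = resample_colormap(base, 128)
print(len(cmap128), cmap128[0], cmap128[-1])
```

The first and last colors of the original table are preserved, so the resampled map spans the same range with fewer (or more) steps.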

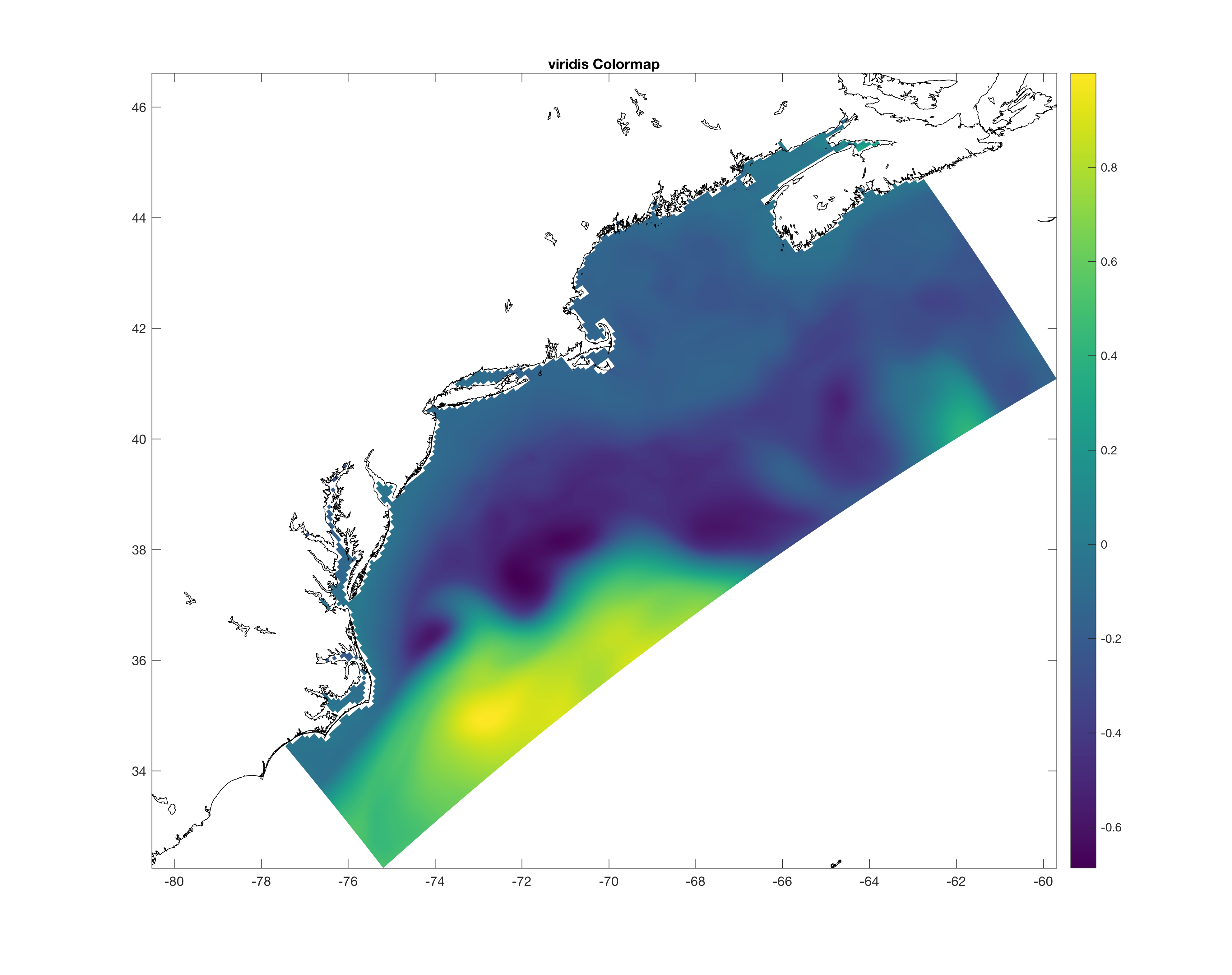

Notice that cmocean takes the color map name without the cm_ prefix. Read the documentation by issuing the help command in Matlab. Here are examples with some of the color maps: cm_balance, cm_curl, cm_delta, cm_haline, cm_matter, cm_speed, cm_solar, cm_thermal, and viridis. |

|||

| #746 | Done | Updated ROMS Plotting Package | ||

| Description |

I updated a few things in the ROMS plotting package, which uses the NCAR/GKS library:

|

|||

| #747 | Done | Important: Updating MPI communication options and NetCDF-4 Parallel I/O | ||

| Description |

This update is very important because it includes changes that allow the user to select the most appropriate MPI library routines for the internal message-passing routines in ROMS distribute.F: mp_assemble, mp_boundary, mp_collect, and mp_reduce. We can use either high-level (mpi_allgather or mpi_allreduce) or low-level (mpi_isend and mpi_irecv) library routines. On some computers, the low-level routines are more efficient than the high-level routines, or vice versa. Supercomputer and cluster vendors offer proprietary MPI libraries that are optimized and tuned to the computer architecture, resulting in very efficient high-level communication routines.

In the past, we decided for you and selected the communication methodology. In this update, we no longer make such selections. Instead, several new C-preprocessing options let you choose the appropriate methodology for your computer architecture. You need to benchmark your computer(s) and select the most efficient strategy.

Notice that when mpi_allreduce is used, a summation reduction is implied. The array data is stored in a global array (same size) on each parallel tile. The global array is always initialized to zero, and each MPI node then operates only on its own portion of it. Because every other entry remains zero, a summation reduction is equivalent to each tile gathering data from the other members of the MPI communicator.

Affected communication routines: mp_assemble, mp_boundary, mp_collect, and mp_reduce.
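The remark about mpi_allreduce in this description (a summation over zero-initialized global arrays gives the same result as a gather) can be checked with a minimal sketch. It uses plain Python with no MPI library: the ranks are simulated as lists, and the helper names `allreduce_sum` and `allgather`, the number of ranks, and the data values are all hypothetical. It illustrates the idea only and is not how distribute.F is implemented.

```python
def allreduce_sum(buffers):
    """Element-wise sum across ranks; every rank receives the same result."""
    total = [sum(vals) for vals in zip(*buffers)]
    return [list(total) for _ in buffers]


def allgather(chunks):
    """Concatenate each rank's chunk in rank order; every rank gets the result."""
    gathered = [v for chunk in chunks for v in chunk]
    return [list(gathered) for _ in chunks]


nranks, chunk = 4, 3

# Hypothetical data: each simulated rank owns `chunk` consecutive entries.
local = [[float(10 * r + k) for k in range(chunk)] for r in range(nranks)]

# Each rank holds a full-size global array, initialized to zero, and fills
# in only its own portion.
buffers = []
for r in range(nranks):
    g = [0.0] * (nranks * chunk)
    g[r * chunk:(r + 1) * chunk] = local[r]
    buffers.append(g)

reduced = allreduce_sum(buffers)
gathered = allgather(local)

# Adding zeros leaves each owned value unchanged, so both results agree.
print(reduced == gathered)
```

The equivalence holds only because each entry is written by exactly one rank. If two ranks wrote to the same entry, the summation would add their contributions, whereas a gather would not.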

If you want to use the same methodology as in previous versions of the code, check your version of the routine distribute.F and you will see something similar to:

#include "cppdefs.h"

MODULE distribute_mod

#ifdef DISTRIBUTE

# undef ASSEMBLE_ALLGATHER /* use mpi_allgather in mp_assemble */

# undef ASSEMBLE_ALLREDUCE /* use mpi_allreduce in mp_assemble */

# define BOUNDARY_ALLREDUCE /* use mpi_allreduce in mp_boundary */

# undef COLLECT_ALLGATHER /* use mpi_allgather in mp_collect */

# undef COLLECT_ALLREDUCE /* use mpi_allreduce in mp_collect */

# define REDUCE_ALLGATHER /* use mpi_allgather in mp_reduce */

# undef REDUCE_ALLREDUCE /* use mpi_allreduce in mp_reduce */

...

Activate the same C-preprocessing options in your header (*.h) or build script file if you want to use the same set-up. Usually, in my applications I activate:

#define COLLECT_ALLREDUCE
#define REDUCE_ALLGATHER

which reports the following information to standard output:

!ASSEMBLE_ALL... Using mpi_isend/mpi_recv in mp_assemble routine.
...
!BOUNDARY_ALLGATHER Using mpi_allreduce in mp_boundary routine.
...
COLLECT_ALLREDUCE Using mpi_allreduce in mp_collect routine.
...
REDUCE_ALLGATHER Using mpi_allgather in mp_reduce routine.

I also made a few changes to several of the NetCDF managing routines to avoid opening too many files. The routines netcdf_check_dim and netcdf_inq_var have an additional optional argument, ncid, to pass the NetCDF file ID, so it is not necessary to open a NetCDF file if it is already open.

The second part of this update includes changes to the parallel I/O in ROMS using the NetCDF-4 library. Parallel I/O only makes sense on High-Performance Computing (HPC) systems with an appropriate parallel file system. Otherwise, the I/O data is still processed serially, with no improvement in computation speed.

In the NetCDF-4 library, parallel I/O is done in either independent mode or collective mode. In independent mode, each processor accesses the data directly from the file and neither depends on nor is affected by other processors. In contrast, in collective mode all processors participate in the I/O, using MPI-I/O and HDF5 to access the tiled data.

To have parallel I/O in ROMS you need to:

|

|||